Introduction

Back in the good old days, when Git was not invented, database administrators will use a bash script to backup their MySQL databases periodically. This method is not true anymore because GitHub, GitLab and Bitbucket provide free plans and allow the programmer to push their latest files and sql dump into the cloud with a simple git commit and git push commands. These backups no longer take up disk space on the server and are done progressively, meaning only the changes are updated, and thus, you will not see multiple copies. As a programmer myself, I utilise GitHub for my files but still use the classic method of compressing my work files and databases using tar / mysqldump and rotate them every X days. I thought it was easier to quickly deploy these tar files into a spare server in case of downtime on the production side

Prerequisites

Using a script for db backup is easy because all you need is copying the bash script and scheduling it to cron job with crontab. Tested working with Ubuntu 18.04 LTS.

- A little knowledge of bash scripting helps.

- Understand how Linux crontab works.

- Basic Linux commands (mkdir, ls and rsync) so that you can check your backups existence.

- Generate SSH keys so that both production and backup server can connect without manual password entry.

Bash Script Backup

Backup MySQL Databases and Files

Before we start, let’s try to understand what files are we going to back up. In this tutorial, we are assuming the production server is running multiple live websites. Each website has files stored either in /var/www/html/web1 or /home/user/web2. Then each website will have its MySQL database with different credentials. We will generate one tar file for the working directory and one sql file from mysqldump for each website. Lastly, we can also compress backup file to *.sql.gz for big databases.

# Back up database mysqldump -u dbuser1 -pDBpassword1 website1_prod > $BACKUP_PATH/$SUBDIR/website1_prod-$(date +\%Y-\%m-\%d).sql # Compress file directory tar zcf $BACKUP_PATH/$SUBDIR/production-$(date +\%Y-\%m-\%d).tar.gz -C / var/www/example.com/production # or Compress file directory excluding certain sub-directories (e.g. doNotBackupMe) tar zcf $BACKUP_PATH/$SUBDIR/production-$(date +\%Y-\%m-\%d).tar.gz --exclude "public_html/doNotBackupMe" -C / var/www/example.com/production

How Many Days to Keep Backups?

This method involves keeping backups locally and then remote uploading to the backup server via rsync. There is finite disk space to consider on both servers. We will set number of days to 30 which is one month worth of backups, in this tutorial. As such, the 31st-day will automatically remove the 1st-day files, and we will be able to maintain 30 sets of backups for each website.

# Rotate backups where $KEEP=30 find $BACKUP_PATH/$SUBDIR/ -mtime +$KEEP -delete # Upload (mirror) backup directory to remote backup server rsync -a --delete $BACKUP_PATH/$SUBDIR -e 'ssh -p 21333' remote_user1@<backup_server_IP>:backups

Adding into a Bash Script

The mysql backup script requires a backup path to store all the mysql dump backups generated by the shell script. The script will produce a *.sql and *.tar.gz files appended with a timestamp for each website. For example, website1_prod-2021-06-07-05-10.sql and website1_files-2021-06-07.tar.gz

!/bin/sh # Keep 30 Days KEEP=30 # Local backup path BACKUP_PATH="/home/local_user1/backups" # Standard steps: SUBDIR="website1" # 1. backup database as *.sql (e.g. website1_prod-2021-06-07-05-10.sql) mysqldump -u dbuser1 -pDBpassword1 website1_DB > $BACKUP_PATH/$SUBDIR/website1_prod-$(date +\%Y-\%m-\%d).sql # 2. tar working directory (e.g. var/www/example.com/*) tar zcf $BACKUP_PATH/$SUBDIR/website1_files-$(date +\%Y-\%m-\%d).tar.gz -C / var/www/example.com/production # 3. rotate to keep last 30 days backups only find $BACKUP_PATH/$SUBDIR/ -mtime +$KEEP -delete # 4. rsync to remote backup server rsync -a --delete $BACKUP_PATH/$SUBDIR -e 'ssh -p 21333' remote_user1@<backup_server_IP>:backups # Another website with different database and file directory path (e.g. home/local_user1/*) SUBDIR="website2" mysqldump -u dbuser2 -pDBpassword2 website2_DB > $BACKUP_PATH/$SUBDIR/website2_prod-$(date +\%Y-\%m-\%d).sql # For this website, do not backup the 'doNotBackupMe' directory (e.g. contains log files) tar zcf $BACKUP_PATH/$SUBDIR/website2_files-$(date +\%Y-\%m-\%d).tar.gz --exclude "public_html/doNotBackupMe" -C / home/local_user1/example1.com/production find $BACKUP_PATH/$SUBDIR/* -mtime +$KEEP -delete rsync -a --delete $BACKUP_PATH/$SUBDIR -e 'ssh -p 21333' remote_user1@<backup_server_IP>:backups # Image directory without database SUBDIR="raw_images" tar zcf $BACKUP_PATH/$SUBDIR/json-$(date +\%Y-\%m-\%d).tar.gz -C / home/local_user1/raw_images find $BACKUP_PATH/$SUBDIR/* -mtime +$KEEP -delete rsync -a --delete $BACKUP_PATH/$SUBDIR -e 'ssh -p 21333' remote_user1@<backup_server_IP>:backups

You may ignore the mysqldump: [Warning] that says using mysql password on command line can be insecure.

Set Up a Cron Job

Linux automatically backup mysql database daily if we run our mysql-files-backup.sh script at 5.10 AM every day. To check your sh command, type $ whereis sh

dxxxxxxe@production-1:~$ crontab -l Edit this file to introduce tasks to be run by cron. # m h dom mon dow command 10 5 * * * /usr/bin/sh /home/user1/scripts/mysql-files-backup.sh

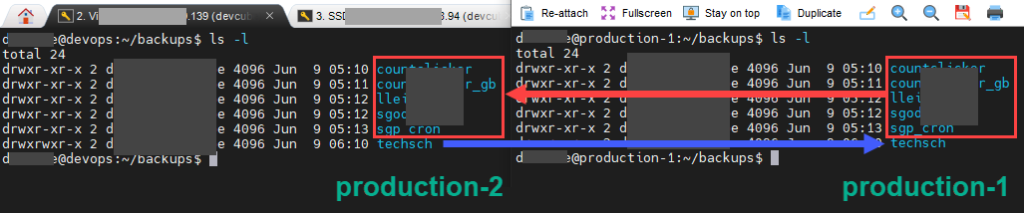

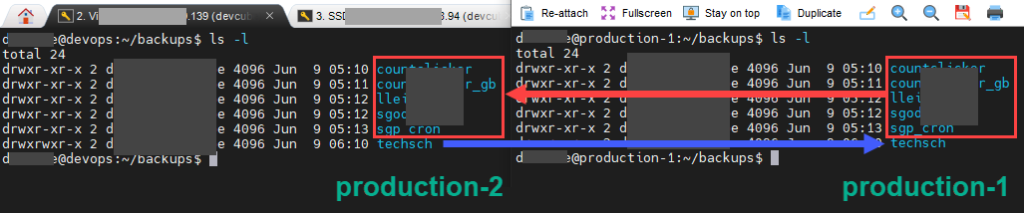

The End Result

The below example shows that backups are done daily from 1st June 2021 to 9th June 2021 for the TechSch website. The /backups is the main backup directory and sub-directory /backups/techsch is just one website. A second website could be /backups/website2

user1@devops:~/backups/techsch$ ls -l total 1817148 -rw-rw-r-- 1 user1 user1 3001934 Jun 1 23:01 techsch-2021-06-01.sql -rw-rw-r-- 1 user1 user1 3001916 Jun 2 06:10 techsch-2021-06-02.sql -rw-rw-r-- 1 user1 user1 2392008 Jun 3 06:10 techsch-2021-06-03.sql -rw-rw-r-- 1 user1 user1 2428698 Jun 4 06:10 techsch-2021-06-04.sql -rw-rw-r-- 1 user1 user1 2996366 Jun 5 06:10 techsch-2021-06-05.sql -rw-rw-r-- 1 user1 user1 2464445 Jun 6 06:10 techsch-2021-06-06.sql -rw-rw-r-- 1 user1 user1 3114968 Jun 7 06:10 techsch-2021-06-07.sql -rw-rw-r-- 1 user1 user1 2520462 Jun 8 06:10 techsch-2021-06-08.sql -rw-rw-r-- 1 user1 user1 3215740 Jun 9 06:10 techsch-2021-06-09.sql -rw-rw-r-- 1 user1 user1 203945726 Jun 1 23:02 techsch-2021-06-01.tar.gz -rw-rw-r-- 1 user1 user1 203942217 Jun 2 06:10 techsch-2021-06-02.tar.gz -rw-rw-r-- 1 user1 user1 203954796 Jun 3 06:10 techsch-2021-06-03.tar.gz -rw-rw-r-- 1 user1 user1 203952450 Jun 4 06:10 techsch-2021-06-04.tar.gz -rw-rw-r-- 1 user1 user1 203952089 Jun 5 06:10 techsch-2021-06-05.tar.gz -rw-rw-r-- 1 user1 user1 203956558 Jun 6 06:10 techsch-2021-06-06.tar.gz -rw-rw-r-- 1 user1 user1 203957656 Jun 7 06:10 techsch-2021-06-07.tar.gz -rw-rw-r-- 1 user1 user1 203955935 Jun 8 06:10 techsch-2021-06-08.tar.gz -rw-rw-r-- 1 user1 user1 203954500 Jun 9 06:10 techsch-2021-06-09.tar.gz

Backups are done locally on the production side and then mirrored to the backup server via rysnc. If you have two productions servers like me, put the backup script on both servers for mirrored backup. You can opt to remove the local copies after the sync is complete if the server is low on disk space, but these days, disk spaces go by GB and are cheap even for SSD or NVMe.

Conclusion

Although I like Git, I don’t use all the features, but I feel more at ease with additional server backups of my web files and databases. Once I had to span up a VPS from DigitalOcean and set up Nginx and DNS and untar my backups. Instantly, my website is back up running again. Of course, my backup server is a different provider from my production server. Don’t underestimate local backups because there was another time when I accidentally removed the entire web folder with rm -Rf /var/www. The local backup was easy retrieval to reinstate everything back. I had my backups in 3 locations: local production, remote backup server and Git. I feel very safe, do you?